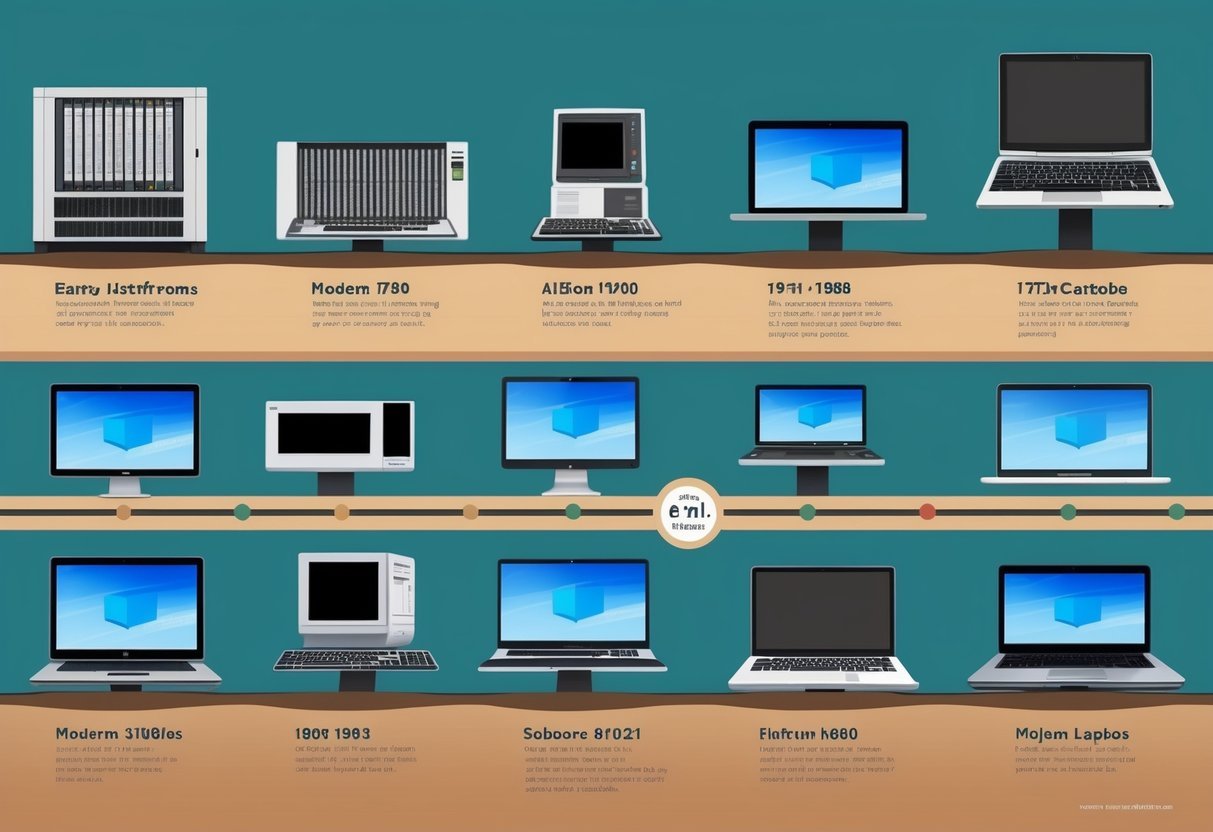

Computers have come a long way since their early days. From simple counting tools to complex machines that fit in our pockets, the journey of computers is quite amazing.

The history of computing goes back thousands of years. It started with basic tools like the abacus and, much later, the slide rule. These helped people do math faster. Then came machines that could handle more complex tasks. The mid-20th century saw the first electronic computers, marking a big leap in computing power.

Today, computers are everywhere. They help us work, play, and stay connected. From smartphones to supercomputers, these devices have changed how we live. The story of how we got here is full of clever ideas and hard work by many smart people.

Precursors to Modern Computers

The path to modern computers began with simple tools for counting and calculation. These early devices laid the groundwork for more complex machines that could process information.

Early Calculation Tools

One of the oldest calculation tools is the abacus. This ancient device uses beads on rods to represent numbers and perform basic math. It’s still used in some parts of the world today.

Another important tool was the slide rule, invented in the 1600s. This ruler-like device let people multiply, divide, and find roots quickly by sliding logarithmic scales against each other. Engineers and scientists relied on it until electronic calculators took over in the 1970s.

Logarithms, introduced by John Napier in 1614, were also key in early computing. They turn multiplication into addition, which made hard calculations much easier to do by hand. Printed tables of logarithms were widely used, and the slide rule itself is built on logarithmic scales.
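To see why this mattered, here is a minimal Python sketch of the trick behind log tables and slide rules, based on the identity log(a × b) = log(a) + log(b). The function name `multiply_with_logs` is our own, and a real slide rule reads only about three significant figures, while this code uses full floating-point precision.

```python
import math


def multiply_with_logs(a: float, b: float) -> float:
    """Multiply two positive numbers the way log tables and slide rules do."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithms are only defined for positive numbers")
    log_sum = math.log10(a) + math.log10(b)  # the hard multiplication becomes an addition
    return 10 ** log_sum                     # the antilogarithm turns the sum back into a product


print(round(multiply_with_logs(23, 47), 6))  # 1081.0
```

With paper tables, the two lookups and the final antilog lookup replaced a long multiplication, which is where the time savings came from.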

Pioneering Mechanical Devices

As math got more complex, people invented machines to help. One early example was the Pascaline, created by Blaise Pascal in 1642. This gear-based device could add and subtract numbers.
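The key trick in a gear-based adder is the carry: when one wheel turns past 9 back to 0, it nudges the next wheel forward by one. The Python sketch below is a simplified model of that idea, not a reconstruction of Pascal's actual mechanism. The wheel layout and the names `pascaline_add` and `read_wheels` are our own, and only addition is modeled.

```python
def pascaline_add(wheels: list[int], amount: int) -> list[int]:
    """Add a non-negative number to a row of decimal digit wheels.

    wheels[0] is the ones wheel, wheels[1] the tens wheel, and so on.
    """
    result = wheels.copy()
    carry = 0
    for position in range(len(result)):
        digit = amount % 10
        amount //= 10
        total = result[position] + digit + carry
        result[position] = total % 10  # a wheel can only show 0-9
        carry = total // 10             # turning past 9 nudges the next wheel by one
    return result  # a carry out of the last wheel is lost, like an overflow


def read_wheels(wheels: list[int]) -> int:
    """Read the wheels as an ordinary number (most significant wheel first)."""
    return int("".join(str(d) for d in reversed(wheels)))


print(read_wheels(pascaline_add([9, 9, 0, 0], 1)))    # 100
print(read_wheels(pascaline_add([5, 4, 3, 0], 678)))  # 1023
```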

Charles Babbage took things further with his Difference Engine, designed in the 1820s. It was never fully built in his lifetime, but it was meant to calculate tables of polynomial values automatically, using only repeated addition.
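The Difference Engine was built around the method of finite differences, which avoids multiplication entirely. For a polynomial of degree n, the nth differences between successive values are constant, so each new value can be produced by a cascade of additions. Here is a minimal Python sketch; the example polynomial x² + x + 41 is our own choice for illustration, and `tabulate_by_differences` is a hypothetical name.

```python
def tabulate_by_differences(initial: list[int], count: int) -> list[int]:
    """Tabulate a polynomial using only addition (the method of finite differences).

    `initial` holds [p(0), first difference, second difference, ...]. For a
    degree-n polynomial the nth difference is constant, so n + 1 numbers suffice.
    """
    columns = initial.copy()
    values = []
    for _ in range(count):
        values.append(columns[0])
        # Each column adds in the column to its right (still holding its old value),
        # the same kind of repeated addition the engine performed with gears.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return values


# p(x) = x² + x + 41: p(0) = 41, first difference p(1) - p(0) = 2, second difference 2.
table = tabulate_by_differences([41, 2, 2], 6)
print(table)  # [41, 43, 47, 53, 61, 71]
```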

These mechanical devices were big steps toward modern computers. They showed that machines could do math and process information, paving the way for the electronic computers we use today.

The Evolution of Computer Concepts

The modern computer grew out of big ideas from thinkers working long before the first electronic machines. These thinkers laid the groundwork for today’s technology.

From Charles Babbage to Ada Lovelace

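Charles Babbage dreamed up early computers in the 1800s. He made plans for the Difference Engine, a machine to calculate mathematical tables. Later, he designed the Analytical Engine, which had a memory (the "store"), a processing unit (the "mill"), and instructions fed in on punched cards. This was much closer to our idea of computers today.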

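Ada Lovelace worked with Babbage. She saw that the Analytical Engine could manipulate symbols of any kind, such as musical notes, not just numbers. In 1843 she published notes on the engine that included a method for computing Bernoulli numbers, which many call the first computer program. Her ideas showed these machines could be general-purpose.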
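Lovelace's best-known program, in her Note G, set out how the Analytical Engine could compute Bernoulli numbers, a sequence of fractions that appears in formulas for sums of powers. The Python sketch below computes the same numbers with a standard modern recurrence rather than her exact sequence of engine operations. The function name is our own, and it uses the convention B_1 = -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return B_0 through B_n as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    which gives the convention B_1 = -1/2.
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Sum the known terms, then solve for B_m (its coefficient is C(m+1, m) = m + 1).
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(f"B_{index} = {value}")
```

Exact fractions matter here: with floating-point numbers, rounding errors would creep in and the odd-numbered values after B_1 would not come out as exactly zero.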

Babbage’s engines were mechanical, built from gears and levers, but they pointed the way toward electronic computers. Lovelace’s work hinted at the power of computer code.

Early Theorists and Their Impact

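Other thinkers helped shape computer concepts too. In the mid-1800s, George Boole created Boolean logic, an algebra in which every value is either true or false and values are combined with operations like AND, OR, and NOT. Decades later, engineers used it to design the switching circuits inside digital computers, where true and false become the binary digits 1 and 0.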
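Boolean logic is enough to build arithmetic. In a digital circuit, a half adder combines two bits with XOR (the sum bit) and AND (the carry bit), and chaining adders together adds whole binary numbers. The Python sketch below models those gates with `True` and `False`; the function names and the 8-bit width are illustrative choices, not taken from any particular machine.

```python
def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two bits. Returns (sum_bit, carry_bit)."""
    return a != b, a and b  # XOR gives the sum bit, AND gives the carry bit


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add two bits plus an incoming carry, built from two half adders and an OR."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 or c2


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers bit by bit, like a ripple-carry adder circuit."""
    carry = False
    result = 0
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        s, carry = full_adder(a, b, carry)
        result |= int(s) << i
    return result  # a carry out of the top bit is dropped, as in fixed-width hardware


print(add_binary(5, 3))      # 8
print(add_binary(200, 100))  # 44, because 300 does not fit in 8 bits
```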

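Alan Turing described a universal machine in 1936. It showed that a single machine, given the right instructions on its tape, could carry out any computation that can be written as a step-by-step procedure. This idea underlies how we think about computer programs today.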
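A Turing machine is just a tape of symbols, a read/write head, a current state, and a table of rules saying what to write, which way to move, and which state to enter next. The Python sketch below simulates one; the example rule table (adding 1 to a binary number) and all names are our own. Loosely, the simulator echoes the universal machine idea: one fixed program that can run any rule table it is given.

```python
def run_turing_machine(rules, tape, head, state, halt_state="halt", blank="_", max_steps=10_000):
    """Run a Turing machine and return the non-blank part of the tape.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L", "R", or "N" (stay put).
    """
    cells = dict(enumerate(tape))  # sparse tape: unwritten cells count as blank
    moves = {"L": -1, "R": 1, "N": 0}
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += moves[move]
        steps += 1
    left, right = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(left, right + 1)).strip(blank)


# Rule table for binary increment: start on the rightmost digit in state "carry".
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", "N", "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", "N", "halt"),   # ran off the left end: write a new leading 1
}

print(run_turing_machine(INCREMENT, "1011", head=3, state="carry"))  # 1100
```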

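John von Neumann helped shape computer architecture in the 1940s. His 1945 report on the EDVAC described a stored-program design, in which instructions and data sit in the same memory. This "von Neumann architecture" is still used in many computers today.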
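The stored-program idea means instructions are just numbers kept in the same memory as data, and the machine repeatedly fetches, decodes, and executes them. The Python sketch below is a toy accumulator machine built on that idea. Its four opcodes and the opcode * 100 + address encoding are invented for illustration and do not match the EDVAC or any real instruction set.

```python
# Hypothetical instruction set: each instruction is opcode * 100 + address.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory: list[int]) -> list[int]:
    """Fetch, decode, and execute instructions stored in the same memory as the data."""
    memory = memory.copy()
    accumulator = 0
    pc = 0  # program counter: address of the next instruction
    while True:
        opcode, address = divmod(memory[pc], 100)  # fetch and decode
        pc += 1
        if opcode == HALT:
            return memory
        if opcode == LOAD:
            accumulator = memory[address]
        elif opcode == ADD:
            accumulator += memory[address]
        elif opcode == STORE:
            memory[address] = accumulator
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


program = [
    LOAD * 100 + 5,   # address 0: accumulator = memory[5]
    ADD * 100 + 6,    # address 1: accumulator += memory[6]
    STORE * 100 + 7,  # address 2: memory[7] = accumulator
    HALT * 100,       # address 3: stop
    0,                # address 4: unused
    20,               # address 5: data
    22,               # address 6: data
    0,                # address 7: the result is written here
]
print(run(program)[7])  # 42
```

Because the program is just numbers in memory, loading a different program means writing different numbers rather than rewiring the machine.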

These early theorists saw the potential of computers. They helped turn mechanical ideas into the digital world we know.

The Advent of Electronic Computing

Electronic computers emerged in the 1940s, revolutionizing calculation and data processing. These machines used electrical circuits and vacuum tubes to perform complex operations much faster than mechanical devices.

The World War II Era

During World War II, the need for faster calculations drove computer development. At the University of Pennsylvania, John Mauchly and J. Presper Eckert designed ENIAC to calculate artillery firing tables for the U.S. Army. Completed in 1945, it is often called the first general-purpose electronic digital computer.

In Britain, the Colossus machines at Bletchley Park helped crack German codes from 1944, before ENIAC was finished. These early computers used vacuum tubes and could process data much faster than earlier mechanical calculators.

John von Neumann made key contributions to computer architecture during this time. His ideas shaped how computers store and process information using binary digits.

Post-War Developments and Mainframes

After the war, computer technology advanced rapidly. The EDSAC, built at Cambridge University in 1949, was one of the first stored-program computers. It kept its instructions in memory alongside its data, so it could be given a new program without the rewiring that machines like ENIAC needed.

In 1951, the UNIVAC I became the first commercial computer produced in the United States. Its first customer was the U.S. Census Bureau, and it went on to serve business and government.

Mainframe computers grew popular in the 1950s and 1960s. These large, powerful machines handled complex calculations for scientific and business purposes. From the late 1950s, they switched from vacuum tubes to transistors, which made them more reliable and efficient.

Computer programming languages also developed during this time, making it easier for people to write software and use these new electronic marvels.

The Rise of Personal Computing

Personal computers changed how people worked and played at home. They made computing power available to everyone, not just big companies. This sparked a tech revolution.

Home Computers Revolution

The personal computer revolution began in the late 1970s. Companies like Apple, Tandy, and Commodore led the way. They made computers small and cheap enough for homes.

In 1977, the Apple II hit the market. It was easy to use and had color graphics. Many people bought it for work and fun.

The Commodore PET and Tandy's TRS-80 also launched in 1977 and were big hits. They let people play games, write, and do math at home.

By the 1980s, more companies joined in. IBM launched its PC in 1981, and it became a standard for business use. Hewlett-Packard also sold personal computers.

User-Friendly Interfaces and Devices

As computers got more common, they needed to be easier to use. This led to big changes in how people worked with them.

Apple’s Macintosh, launched in 1984, was a game-changer. It had a graphical user interface (GUI) and a mouse. This made it much simpler to use than text-based systems.

The GUI used icons and windows instead of text commands. People could point and click instead of typing complex instructions. This made computers accessible to more people.

Laptops also appeared in the 1980s. They let people take their work anywhere. Early models were bulky, but they got smaller and more powerful over time.

These advances helped make computers a key part of daily life for millions of people.

Advancements in Computing Hardware

Computing hardware has changed a lot over time. New inventions made computers faster and smaller. These changes helped computers become a big part of our lives.

From Vacuum Tubes to Silicon

The first computers used vacuum tubes. These were big glass tubes that got very hot. They took up a lot of space and used a lot of power.

In 1947, researchers at Bell Labs invented the transistor, and during the 1950s it began replacing vacuum tubes in computers. Transistors were much smaller than vacuum tubes. They also used less power and failed far less often.

The next big step was the integrated circuit, invented in the late 1950s. It put many transistors on one small chip, making computers even smaller and faster.

In 1971, the microprocessor came along. It put a computer's entire central processing unit (CPU) on a single chip, small enough to sit on a fingertip.

One famous early computer was the ENIAC. It used vacuum tubes and filled a whole room. Today’s computers are much smaller but way more powerful.

Computer chips keep getting better. Modern processors hold billions of transistors, which lets our phones and laptops do amazing things.

Software and Programming Languages

Computer software and programming languages are key to making computers work. They tell machines what to do and how to do it. These tools have grown a lot over time.

The Creation of Coding Languages

Early computers were programmed in machine code or assembly language: long strings of numbers or cryptic abbreviations, written separately for each kind of machine. In the 1950s, things changed. New languages made coding easier.

FORTRAN came out in 1957. It was great for math and science. COBOL appeared in 1959. It helped with business tasks.

These languages let more people write computer programs. They didn’t need to know machine code anymore.

As time went on, even better languages came out. They made writing software faster and simpler.

Operating Systems and Software Development

Operating systems are large, essential programs. They manage a computer's hardware, such as its memory, storage, and processor, and provide services that other software relies on.

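UNIX was a big deal. Developed at Bell Labs starting in 1969, it could be moved to many kinds of computers, and its design influenced later systems such as Linux and macOS. UNIX machines also helped spread the networking software that the early internet ran on.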

Software tools got better too. They helped coders write programs faster. IDEs (Integrated Development Environments) became popular. These tools put everything a coder needs in one place.

Today, there are many ways to make software. Web apps, mobile apps, and PC programs all need different tools. Coding has become easier, but also more complex.

Digital Storage and Memory

Computer storage and memory have changed a lot over the years. They’ve gotten much faster and can hold way more information than ever before.

The Concept of Data Storage

Data storage is how computers keep information for later use. In the early days, computers used punch cards and magnetic tape. These held little data by today's standards: a standard punch card stored just 80 characters.

As time went on, new ways to store data came about. Floppy disks were popular in the 1980s and 1990s. They could hold more data and were easy to carry around.

Hard drives became the main way to store lots of data. They use spinning disks with magnetic coatings. Hard drives can hold tons of files, music, and videos.

Today, many people use solid-state drives (SSDs). These store data on flash memory chips, have no moving parts, and are much faster than hard drives.

Evolution of Memory Technologies

Memory is different from storage. It’s where computers keep data they’re using right now.

Early computers used magnetic core memory, made of tiny magnetic rings threaded on wires. It was slow by today's standards.

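Semiconductor RAM (Random Access Memory) chips came next, starting in the 1970s. They are much faster and hold far more than core memory. "Random access" means the computer can jump straight to any memory location instead of reading through data in order, as it would on tape.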

Over time, RAM has gotten much faster and can hold more data. This helps computers run more complex programs.

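Some computers have also used newer memory technologies like 3D XPoint, sold as Intel Optane. It sits between RAM and flash storage: slower than RAM, but faster than flash, and it keeps its data when the power is off. Intel has since discontinued Optane.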

Modern Computing and Its Impact

Computers have evolved rapidly, becoming smaller yet more powerful. They now shape nearly every aspect of our lives. From smartphones to quantum machines, computing continues to transform how we work, communicate, and solve problems.

Multipurpose Devices and Cloud Computing

Smartphones and tablets have changed how we use computers. These pocket-sized devices let us access information, play games, and stay connected anywhere. The iPhone and iPad sparked a mobile revolution, putting powerful computers in our hands.

Cloud computing has also made a big impact. It lets us store files online and use software without installing it. This makes it easy to work from any device and share data with others.

Many people now use their phones or tablets as their main computer. These devices can do almost everything a desktop can, but they’re more portable and user-friendly.

Artificial Intelligence and Machine Learning

AI and machine learning are changing how computers work. These technologies help computers learn from data and make decisions on their own. They power things like voice assistants, recommendation systems, and self-driving cars.

AI is getting better at tasks like:

- Understanding speech and text

- Recognizing images and faces

- Predicting trends and outcomes

- Playing complex games

Machine learning lets computers improve at a task by finding patterns in examples, rather than following rules a programmer wrote out by hand. This helps them tackle problems that were once too complex for machines.
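Here is a minimal Python sketch of what "learning from data" means. Instead of hard-coding the rule y = 2x + 1, the program starts with guesses for a slope and an intercept and repeatedly nudges them to reduce its error on example points, a technique called gradient descent. The data, learning rate, and function name are illustrative assumptions; real systems train models with millions or billions of parameters, but the basic loop is similar.

```python
def fit_line(xs: list[float], ys: list[float],
             learning_rate: float = 0.01, epochs: int = 5000) -> tuple[float, float]:
    """Learn slope w and intercept b for y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start knowing nothing
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step each parameter a little way downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Example data generated by y = 2x + 1; the program is never told that rule.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")  # learned w = 2.000, b = 1.000
```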

As AI keeps advancing, it’s changing many industries. It’s making healthcare more precise, finance more efficient, and entertainment more personalized.

Quantum Computing Horizons

Quantum computers are a new frontier in computing. They use the strange rules of quantum physics to solve certain problems much faster than regular computers.

While still in early stages, quantum computers could revolutionize fields like:

- Drug discovery

- Financial modeling

- Weather prediction

- Cryptography

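These machines work with quantum bits, or “qubits”, instead of regular bits. A qubit can be in a superposition of 0 and 1, and groups of qubits can be entangled with one another. Quantum algorithms use these effects to solve certain problems, such as factoring large numbers, far faster than any known method on classical computers.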
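A single qubit can be described by two complex amplitudes, one for 0 and one for 1, whose squared magnitudes give the odds of measuring each value. The Python sketch below simulates one qubit and the Hadamard gate, which turns a definite 0 into an equal superposition; applying the gate twice returns the qubit to 0, a small example of the interference quantum algorithms rely on. The function names are our own. Simulating n qubits this way needs 2^n amplitudes, which is one reason classical computers struggle to imitate large quantum machines.

```python
import math
import random

State = tuple[complex, complex]  # (amplitude of |0>, amplitude of |1>)


def hadamard(state: State) -> State:
    """Apply the Hadamard gate: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state: State) -> tuple[float, float]:
    """Chance of measuring 0 or 1: the squared magnitude of each amplitude."""
    a0, a1 = state
    return abs(a0) ** 2, abs(a1) ** 2


def measure(state: State, rng: random.Random) -> int:
    """Simulate one measurement, which yields 0 or 1 at random with those probabilities."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero: State = (1 + 0j, 0j)
superposed = hadamard(zero)
print(probabilities(superposed))            # roughly (0.5, 0.5)
print(probabilities(hadamard(superposed)))  # roughly (1.0, 0.0): the two paths interfere

rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    counts[measure(superposed, rng)] += 1
print(counts)  # about 500 of each
```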

Big tech companies and research labs are racing to build practical quantum computers. As they improve, they might tackle problems we once thought impossible to solve.

Connectivity and the Internet

The internet changed how computers talk to each other. It made sharing info easy and quick. Mobile tech and smart devices made the web part of daily life.

Networking and the Birth of the Internet

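The internet grew out of ARPANET, a U.S. Defense Department research project that sent its first message in 1969. Its main goal was to let researchers at different sites share computers and data. ARPANET was one of the first networks to use packet switching, an approach that earlier researchers had explored partly to build networks that could keep working if some links were knocked out.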

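In the 1970s, more networks popped up, and they needed a way to talk to each other. Vint Cerf and Bob Kahn designed the TCP/IP protocols to solve this: IP moves packets from network to network, while TCP makes sure they arrive complete and in the right order. ARPANET switched to TCP/IP in 1983.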
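The core idea behind this kind of networking is packet switching: a message is cut into numbered pieces that may take different routes and arrive out of order, and the receiver uses the numbers to put them back together. The Python sketch below models only that reassembly step, with hypothetical function names. It is a simplification: real TCP numbers bytes rather than whole packets and relies on acknowledgments so that the sender resends anything lost.

```python
import random


def split_into_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Cut a message into (sequence_number, chunk) packets of at most `size` characters."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def reassemble(packets: list[tuple[int, str]], expected_count: int) -> str:
    """Rebuild the message from packets that may be out of order or duplicated."""
    by_seq = dict(packets)  # duplicates collapse onto the same sequence number
    missing = [seq for seq in range(expected_count) if seq not in by_seq]
    if missing:
        # Real TCP would wait for the sender to retransmit instead of failing.
        raise ValueError(f"missing packets: {missing}")
    return "".join(by_seq[seq] for seq in range(expected_count))


message = "Packets can travel by different routes."
packets = split_into_packets(message, 8)
count = len(packets)
random.Random(7).shuffle(packets)  # simulate out-of-order arrival
print(reassemble(packets, count) == message)  # True
```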

The 1990s saw the internet go public. Web browsers made it easy to surf. Soon, millions of people were online.

The Mobile Revolution and IoT

Phones got smart in the 2000s. They could go online anywhere. This kicked off the mobile web era. People could check email, browse, and use apps on the go.

Wi-Fi made getting online at home and in public simple. No wires needed! It let laptops, tablets, and phones connect fast.

The Internet of Things (IoT) came next. It’s not just computers and phones online now. TVs, fridges, and even light bulbs can connect. They make homes and cities smarter.

Mobile networks keep getting faster. 5G offers higher speeds and lower delays, and it can handle many more devices at once, which could help applications like self-driving cars and virtual reality.

Trailblazers in the Computing World

The world of computing has been shaped by brilliant minds who dared to dream big. These pioneers laid the groundwork for the digital age we live in today.

Influential Inventors and Visionaries

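Alan Turing stands out as a key figure in early computing. His 1936 work on a universal machine laid the theoretical groundwork for modern computers, and during World War II he helped design code-breaking machines at Bletchley Park. In 1950 he also proposed what is now called the Turing Test, which asks whether a machine's conversation can be told apart from a human's.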

Konrad Zuse built the Z1, a mechanical programmable calculator, in 1938, and completed the Z3 in 1941. The Z3 is often credited as the first working programmable, fully automatic digital computer. Herman Hollerith’s punch card tabulating machines sped up processing of the 1890 U.S. Census, and his company later became part of IBM.

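Vannevar Bush described the Memex in 1945, an imagined desk-sized device for storing documents and linking them together. It was never built, but it anticipated the hypertext systems we use today and influenced how we organize and find information on computers.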

Contributors to Modern Computer Science

Douglas Engelbart changed how we use computers. He invented the computer mouse and showed how computers could do more than just number crunching. His famous 1968 demo introduced many ideas we still use today.

Ada Lovelace is often credited with writing the first computer program, published in 1843. She saw the potential for computers to do more than just math. Her work laid the foundation for software development.

Grace Hopper developed the A-0 system in 1952, often described as the first compiler. A compiler translates human-readable code into machine instructions. This made programming much easier and opened up computing to more people.
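To make the idea of a compiler concrete, here is a toy Python sketch that translates an arithmetic expression into instructions for a simple stack machine and then runs them. Hopper's early compilers worked quite differently. The instruction names (`PUSH`, `LOAD`, `ADD`, and so on) are invented for this example, and the sketch leans on Python's standard `ast` module to parse the source text.

```python
import ast
import operator

# Map Python's parsed operator types to our hypothetical instruction names.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}
ARITHMETIC = {"ADD": operator.add, "SUB": operator.sub,
              "MUL": operator.mul, "DIV": operator.truediv}


def compile_expression(source: str) -> list[tuple]:
    """Translate an expression like "(a + 2) * b" into stack-machine instructions."""
    def emit(node: ast.AST) -> list[tuple]:
        if isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            # Compute both operands first, then apply the operator (postfix order).
            return emit(node.left) + emit(node.right) + [(OPCODES[type(node.op)],)]
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return [("PUSH", node.value)]
        if isinstance(node, ast.Name):
            return [("LOAD", node.id)]
        raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    return emit(ast.parse(source, mode="eval").body)


def execute(program: list[tuple], variables: dict[str, float]) -> float:
    """Run the instructions on a simple stack machine."""
    stack: list[float] = []
    for instruction in program:
        op = instruction[0]
        if op == "PUSH":
            stack.append(instruction[1])
        elif op == "LOAD":
            stack.append(variables[instruction[1]])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(ARITHMETIC[op](left, right))
    return stack.pop()


program = compile_expression("(a + 2) * b")
print(program)  # [('LOAD', 'a'), ('PUSH', 2), ('ADD',), ('LOAD', 'b'), ('MUL',)]
print(execute(program, {"a": 3, "b": 4}))  # 20
```

Splitting translation (`compile_expression`) from running (`execute`) mirrors the real division of labor: the expression is translated once, and the resulting instructions can then be run many times with different values.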

These trailblazers, along with many others, helped shape the digital world we know today. Their ideas and inventions continue to influence how we use and think about computers.